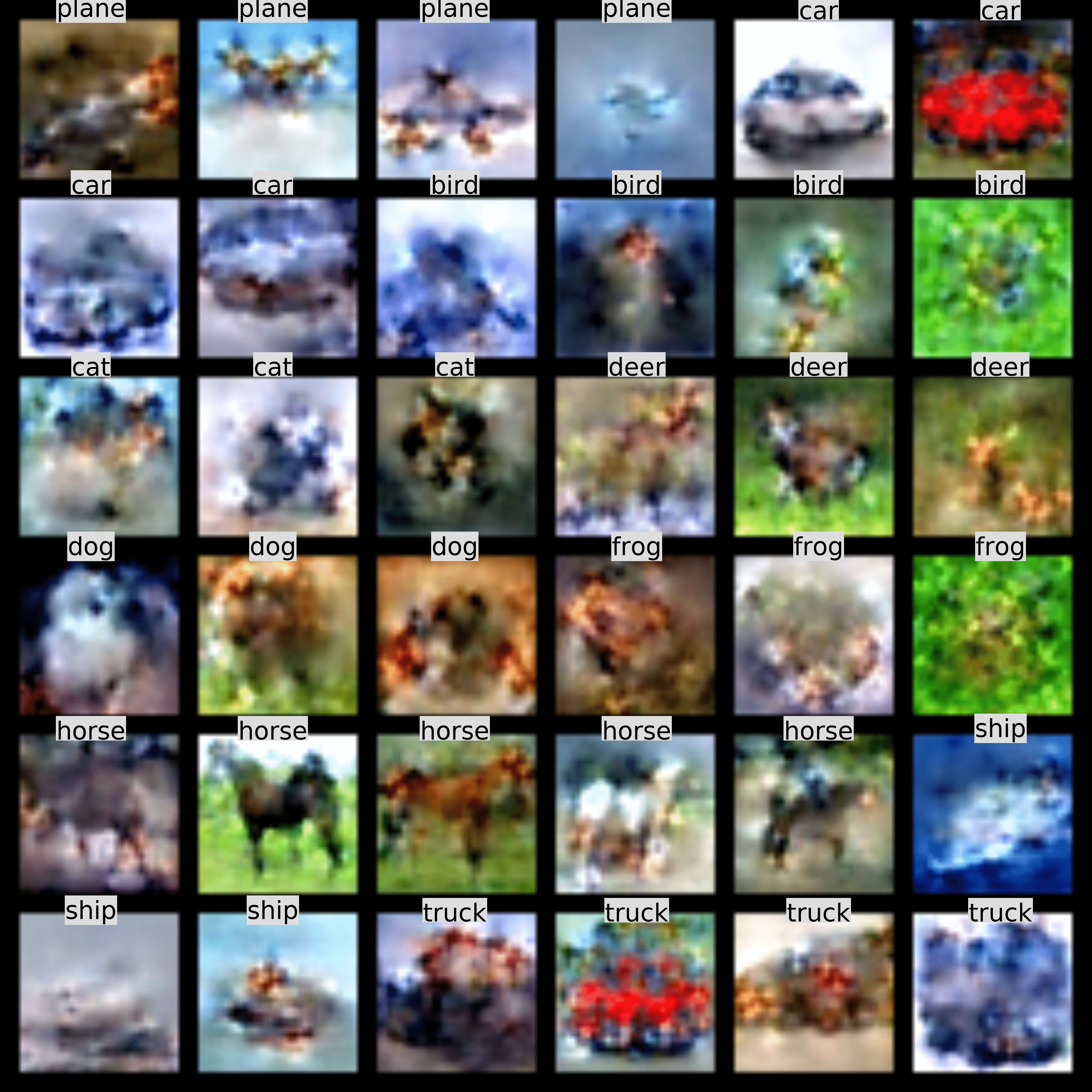

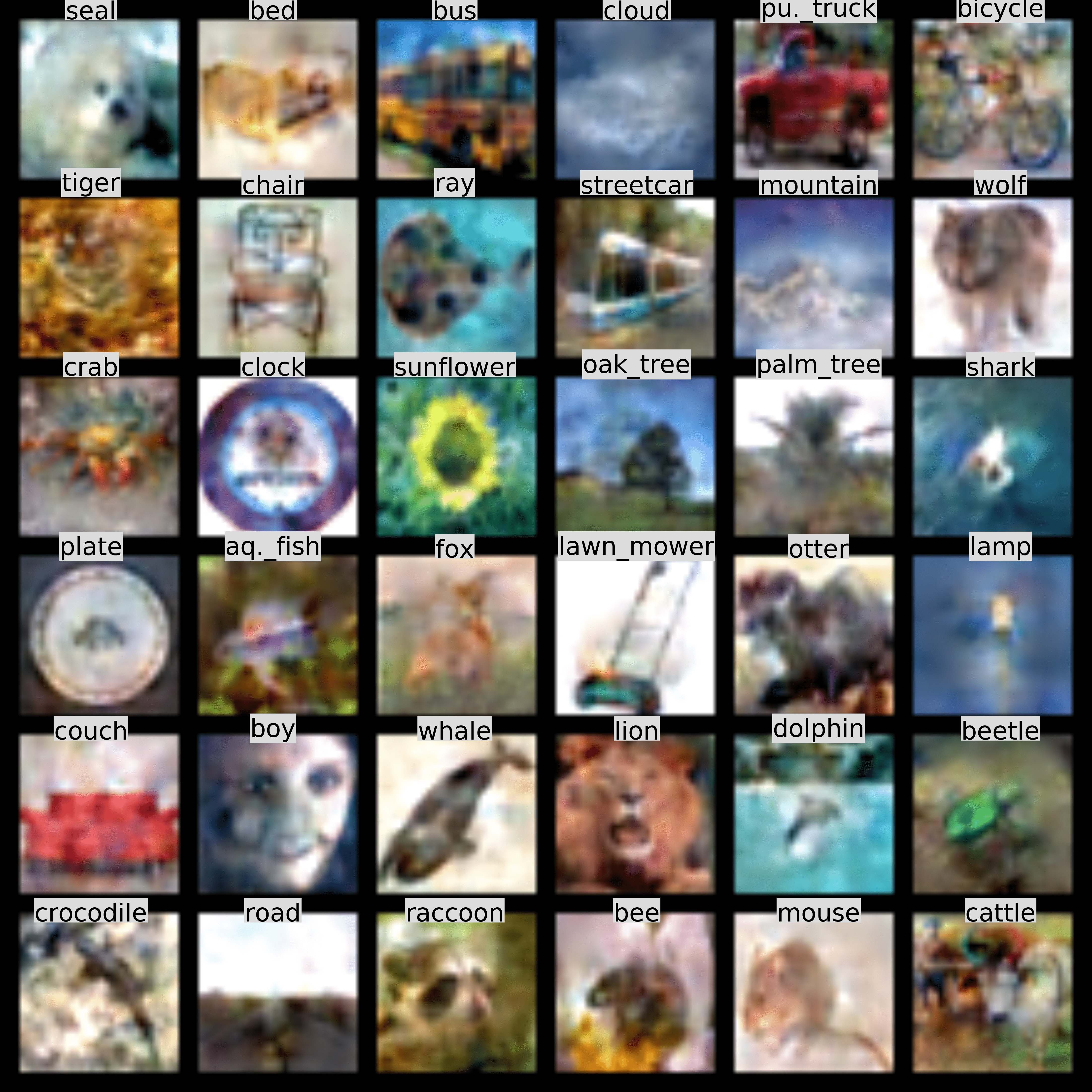

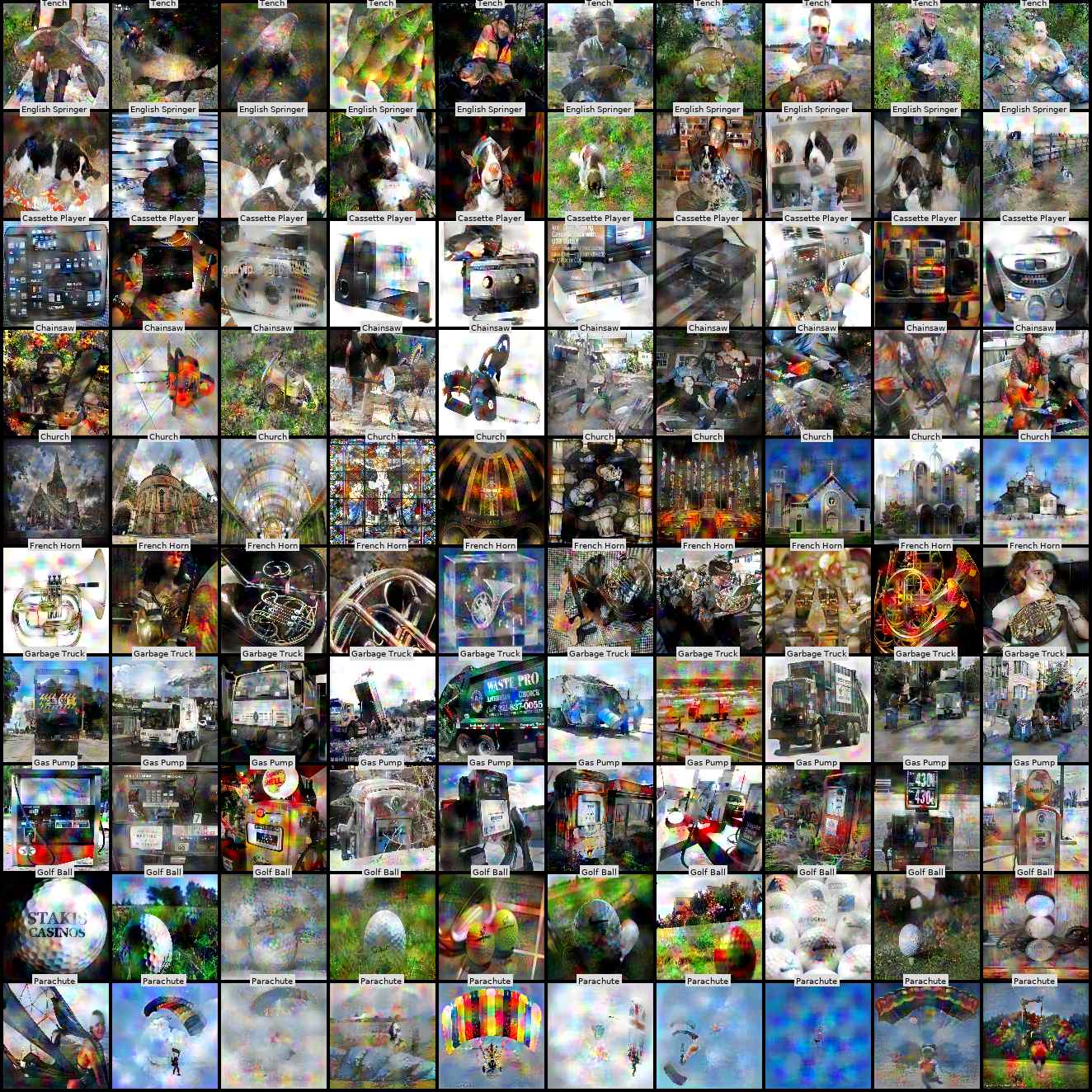

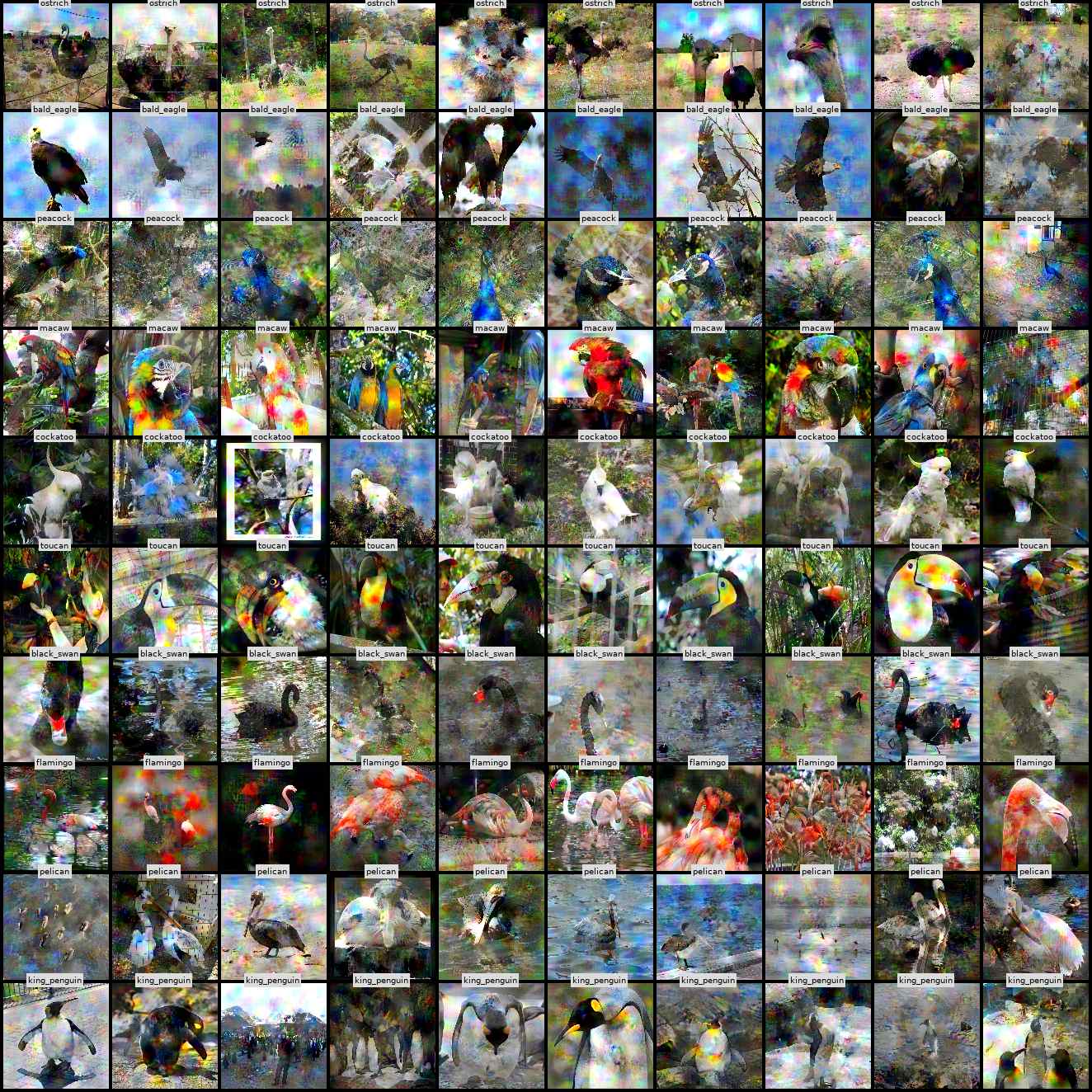

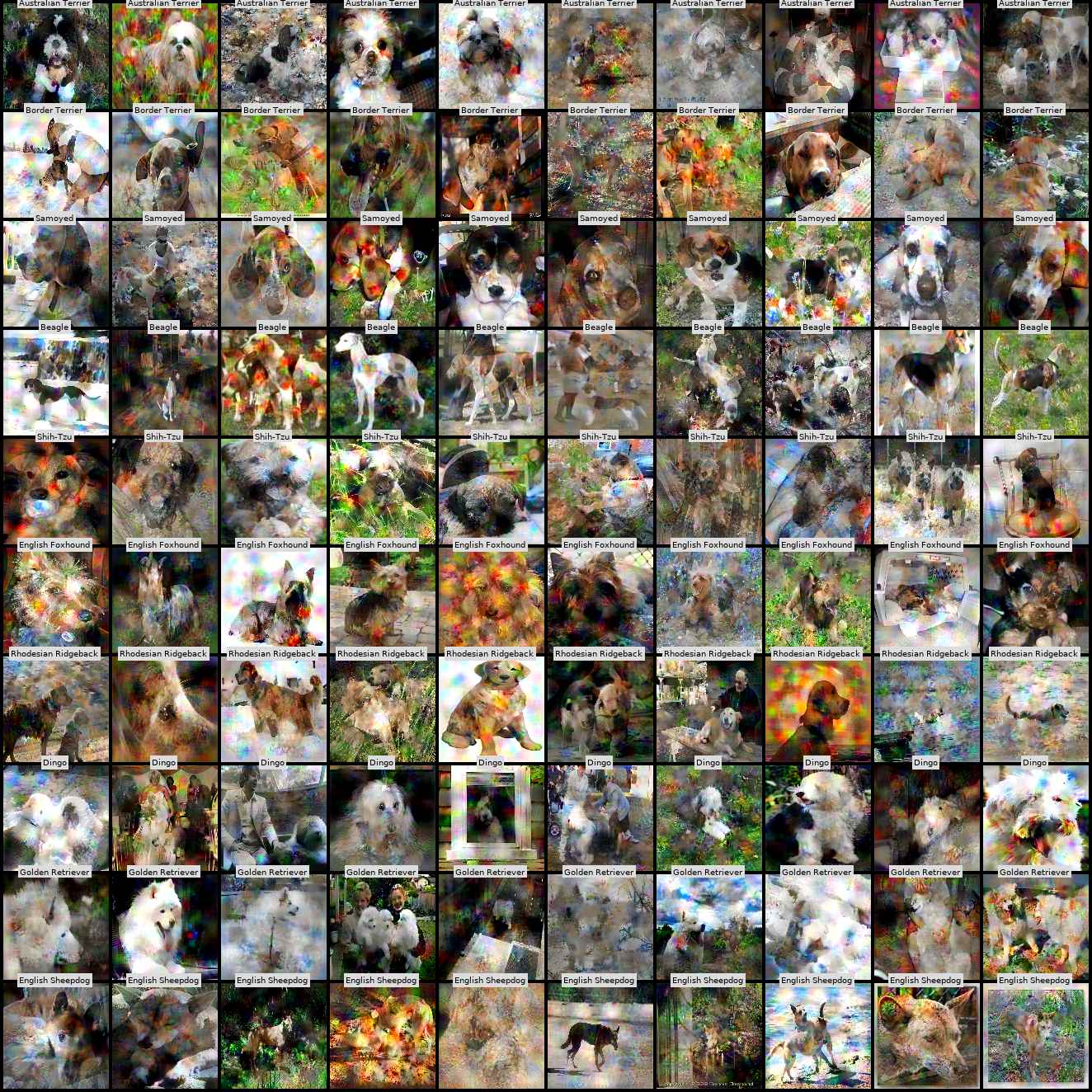

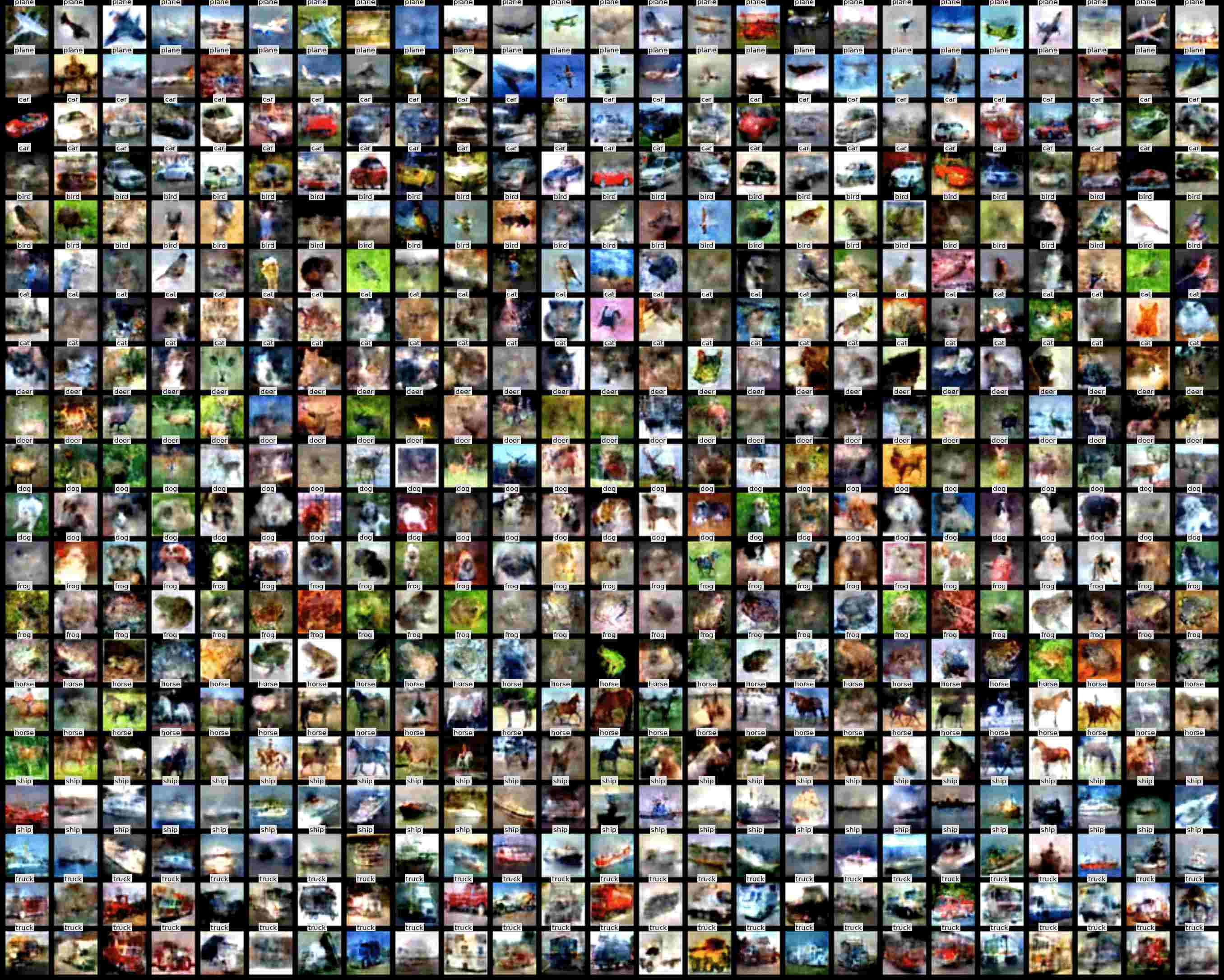

Researchers have long sought to minimize training costs in deep learning while maintaining strong generalization across diverse datasets. Emerging research on dataset distillation aims to reduce these costs by creating a small synthetic set that captures the information in a larger real dataset, so that a model trained on the synthetic set ultimately achieves test accuracy equivalent to that of a model trained on the whole dataset. Unfortunately, the synthetic data generated by previous methods are not guaranteed to match the distribution and discriminative power of the original training data, and these methods incur significant computational costs. Despite promising results, a significant performance gap remains between models trained on condensed synthetic sets and those trained on the whole dataset.

In this paper, we address these challenges with efficient Dataset Distillation with Attention Matching (DataDAM), which achieves state-of-the-art performance while reducing training costs. Specifically, we learn synthetic images by matching the spatial attention maps that different layers of a family of randomly initialized neural networks produce for real and synthetic data. Our method outperforms prior methods in most settings on several datasets, including CIFAR10/100, TinyImageNet, ImageNet-1K, and subsets of ImageNet-1K, with improvements of up to 6.5% on CIFAR100 and 4.1% on ImageNet-1K. We also show that our high-quality distilled images have practical benefits for downstream applications such as continual learning and neural architecture search.
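To make "matching spatial attention maps" concrete, here is a minimal pure-Python sketch for a single layer and a single class. It assumes the common attention-transfer formulation: a feature map of shape channels × height × width is collapsed into one spatial map by summing the absolute activations raised to a power `p` over channels, flattened, and L2-normalized. The loss is then the squared distance between the mean normalized maps of the real and synthetic batches. The function names and the default `p=4.0` are hypothetical illustrations, not taken from the DataDAM code.

```python
import math
from typing import List, Sequence

# Hypothetical layout: one feature map is [channels][height][width].
FeatureMap = Sequence[Sequence[Sequence[float]]]


def spatial_attention(fmap: FeatureMap, p: float = 4.0) -> List[float]:
    """Collapse channels into one flattened H*W map, a[h][w] = sum_c |F[c][h][w]|**p, then L2-normalize."""
    height, width = len(fmap[0]), len(fmap[0][0])
    att = [
        sum(abs(channel[h][w]) ** p for channel in fmap)
        for h in range(height)
        for w in range(width)
    ]
    norm = math.sqrt(sum(v * v for v in att)) or 1.0  # guard against an all-zero map
    return [v / norm for v in att]


def mean_vector(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean over a batch of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def attention_matching_loss(
    real_maps: Sequence[FeatureMap], syn_maps: Sequence[FeatureMap], p: float = 4.0
) -> float:
    """Squared L2 distance between mean normalized attention of real and synthetic images."""
    real_mean = mean_vector([spatial_attention(f, p) for f in real_maps])
    syn_mean = mean_vector([spatial_attention(f, p) for f in syn_maps])
    return sum((r - s) ** 2 for r, s in zip(real_mean, syn_mean))


# Usage: two one-channel 1x2 feature maps whose activation sits in different positions.
real = [[[1.0, 0.0]]]
syn = [[[0.0, 2.0]]]
print(attention_matching_loss([real], [syn]))
```

Because each map is normalized before averaging, the loss compares *where* a layer attends rather than how strongly it activates, which is the intuition behind matching attention instead of raw features. In the full method this term would be summed over several layers and over classes, with freshly sampled random network weights at each step.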

Dataset Distillation with Attention Matching (DataDAM) is designed to efficiently capture the most important and discriminative information in real datasets. The method consists of two components: Spatial Attention Matching (SAM) and Last-Layer Feature Alignment. SAM aligns the spatial attention maps that different layers of randomly initialized neural networks produce for real and synthetic images, while Last-Layer Feature Alignment matches the embedded representations of real and synthetic images at the networks' final layer. Together, they provide a streamlined and effective way to distill the essential information of a dataset.
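The two components can be combined into a single objective. The following pure-Python sketch assumes that Last-Layer Feature Alignment is a linear-kernel maximum mean discrepancy (MMD) estimate, that is, the squared distance between the mean final-layer embeddings of real and synthetic images. It further assumes that the per-layer SAM losses have already been computed and are added to this term weighted by a coefficient `lam`. The names `last_layer_alignment`, `combined_objective`, and `lam` are hypothetical, and the weight value used below is illustrative only, not a setting reported for DataDAM.

```python
from typing import List, Sequence


def mean_embedding(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of a batch of final-layer embeddings."""
    n = len(embeddings)
    return [sum(col) / n for col in zip(*embeddings)]


def last_layer_alignment(
    real_emb: Sequence[Sequence[float]], syn_emb: Sequence[Sequence[float]]
) -> float:
    """Linear-kernel MMD estimate: squared L2 distance between mean embeddings."""
    real_mean = mean_embedding(real_emb)
    syn_mean = mean_embedding(syn_emb)
    return sum((r - s) ** 2 for r, s in zip(real_mean, syn_mean))


def combined_objective(
    sam_layer_losses: Sequence[float],
    real_emb: Sequence[Sequence[float]],
    syn_emb: Sequence[Sequence[float]],
    lam: float,
) -> float:
    """Sum of per-layer attention-matching losses plus a weighted last-layer alignment term."""
    return sum(sam_layer_losses) + lam * last_layer_alignment(real_emb, syn_emb)


# Usage: two real embeddings average to [2, 0]; one synthetic embedding sits at the origin.
real_emb = [[1.0, 0.0], [3.0, 0.0]]
syn_emb = [[0.0, 0.0]]
print(combined_objective([0.5, 0.25], real_emb, syn_emb, lam=0.5))
```

In a full implementation, this scalar would be differentiated with respect to the synthetic image pixels (for example with an autodiff framework), and the networks providing the features would be re-sampled with random weights rather than trained.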

    @InProceedings{Sajedi_2023_ICCV,
        author    = {Sajedi, Ahmad and Khaki, Samir and Amjadian, Ehsan and Liu, Lucy Z. and Lawryshyn, Yuri A. and Plataniotis, Konstantinos N.},
        title     = {DataDAM: Efficient Dataset Distillation with Attention Matching},
        booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
        month     = {October},
        year      = {2023},
        pages     = {17097-17107}
    }